What Is a Bad Block?

Understanding Bad Blocks: Definition and Implications

Does your company worry about storage device errors? This post defines a bad block and explains what its presence implies. It outlines key metrics for identifying bad blocks and discusses strategies to address them, so readers can protect data integrity and reduce equipment downtime.

Key Takeaways

- Bad blocks cause unreliable read and write operations on the affected storage sectors.

- Prolonged device usage and physical damage increase the likelihood of encountering bad blocks.

- Diagnostic utilities identify error locations and help assess operational impact.

- Scheduled maintenance and monitoring protect data integrity and reduce unplanned downtime.

Defining Bad Block and Its Importance

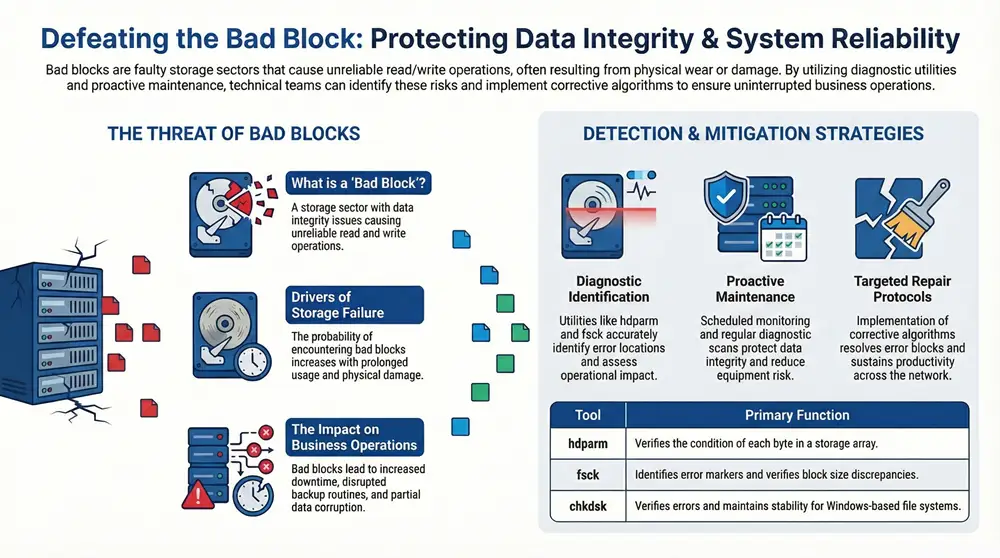

A bad block is a sector, or group of sectors, on a storage device that can no longer be read or written reliably, which puts the data stored there at risk. Intel-based systems, like any other platform, depend on accurate diagnostics to flag such sectors.

The probability of encountering a bad block increases with prolonged device usage and physical damage. Modern drives and management tools use algorithms, such as error-correcting codes, to detect faults and judge their severity at the sector level rather than byte by byte.

This issue is commonly diagnosed using utilities such as hdparm, which can read individual sectors on ATA and SATA drives to check whether they are still readable. A typical diagnostic workflow covers:

- Identification of the bad sector

- Assessment of failure probability

- Implementation of corrective algorithms
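The identification step above can be sketched in a few lines of Python. This is a minimal illustration, not how hdparm or any other utility works internally: it reads a disk image (or, with sufficient privileges, a device node) block by block and records every block whose read fails with an I/O error. The function name `find_unreadable_blocks` and the `read_block` hook are hypothetical, introduced here so the logic can be exercised without damaged hardware. It assumes a Unix-like system, since it relies on `os.pread`, and it reads through the operating system cache, so a real scanner would need more care.

```python
import os


def find_unreadable_blocks(path, block_size=4096, read_block=None):
    """Return the indices of blocks that raise OSError when read.

    read_block is a hypothetical hook (fd, length, offset) used to
    simulate failures; by default it is a plain os.pread call.
    """
    reader = read_block or os.pread
    bad = []
    fd = os.open(path, os.O_RDONLY)
    try:
        # Seeking to the end yields the size for regular files and,
        # on Linux, for block devices as well.
        size = os.lseek(fd, 0, os.SEEK_END)
        total_blocks = (size + block_size - 1) // block_size
        for index in range(total_blocks):
            try:
                reader(fd, block_size, index * block_size)
            except OSError:
                # The read failed: remember which block it was.
                bad.append(index)
    finally:
        os.close(fd)
    return bad
```

Calling `find_unreadable_blocks("disk.img")` on a healthy image returns an empty list. A non-empty result is only a starting point for the assessment and correction steps, which depend on the drive and the file system in use.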

Experts in office equipment supply and repair consider accurate detection critical, as even a single unreadable sector can corrupt a file or degrade system performance. Effective responses minimize downtime and protect valuable data.

Characteristics of a Bad Block

A bad block appears as a faulty storage sector that disrupts data integrity and causes read/write errors, which can slow down or stall the storage interface while the drive retries the operation.

The issue can affect any file system, such as ext2, and any operating system, such as Windows 7 and Windows 10, since all of them rely on stable storage for optimal performance.

The diagnostic process identifies abnormal sectors and categorizes errors based on distinct criteria:

- Identification of error location

- Assessment of operational impact

- Correlation with errors logged by the operating system

- Monitoring through web-based dashboards viewed in a browser such as Firefox

Technical experts observe that bad blocks compromise system reliability, and corrective measures help storage devices keep performing well across multiple interfaces.

Implications of Having Bad Blocks in Storage Devices

The presence of bad blocks in storage devices can undermine a system's reliability by disrupting backup operations and affecting data retrieval. Business systems running Linux or using the NTFS file system may see reduced performance even when only a small part of a multi-gigabyte volume is compromised.

This condition increases the risk of data loss and partial corruption, affecting backup routines and read/write processes. Devices connected via SCSI are affected like any others, with reduced efficiency and data integrity. Handling the problem involves:

- Detection of affected sectors

- Evaluation of data transfer errors

- Mitigation through targeted repair protocols
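Partial corruption is easiest to notice when there is a known-good reference to compare against. The sketch below is one possible approach, not a prescribed one: it records a SHA-256 checksum for each file while the data is known to be healthy, then re-checks the files later and reports any that have changed or can no longer be read. The helper names `build_manifest` and `find_damaged_files` are hypothetical.

```python
import hashlib


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large files do not fill memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(paths):
    """Record a checksum per file while the data is known to be good."""
    return {str(p): sha256_of(p) for p in paths}


def find_damaged_files(manifest):
    """Return files that are unreadable or no longer match the manifest."""
    damaged = []
    for name, expected in manifest.items():
        try:
            actual = sha256_of(name)
        except OSError:
            # Missing file or I/O error, e.g. a read hitting a bad block.
            damaged.append(name)
            continue
        if actual != expected:
            damaged.append(name)
    return damaged
```

A mismatch does not prove that a bad block is the cause, since a legitimate edit changes the checksum too, so a check like this fits best on data that is not expected to change, such as archived backups.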

The presence of bad blocks may call for more frequent backups to safeguard data. Technical teams can adjust maintenance schedules so that data remains secure even when the storage hardware is degrading.

Business operations suffer as downtime increases due to repair work triggered by bad blocks. This calls for closer monitoring of Linux and NTFS systems, large multi-gigabyte volumes, and SCSI-attached devices in order to sustain productivity.

Key Metrics for Identifying Bad Blocks

The fsck tool is a primary resource for checking file system consistency on Linux. On ext2-family file systems, e2fsck can additionally scan for bad blocks when run with the -c option. Because file system tools count in file system blocks, the block size must be known to interpret the locations they report.

Manufacturer specifications, such as rated endurance, offer a benchmark for comparing observed wear against expected performance, helping pinpoint drives whose blocks fail sooner than they should.

Tracking how each I/O thread or workload fares when reading and writing data lets technical teams gauge the endurance of storage components and base decisions on repeated error trends rather than isolated events.
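One simple way to act on repeated error trends is to sample a cumulative error counter at regular intervals and flag the device once the counter has risen several times in a row. The sketch below assumes such samples are already being collected. The function name `rising_error_trend` and the default of three consecutive increases are illustrative choices, not an established threshold.

```python
def rising_error_trend(samples, min_increases=3):
    """Return True if a cumulative error count rose in at least
    min_increases consecutive sampling intervals.

    samples: error counts ordered from oldest to newest.
    """
    streak = 0
    for previous, current in zip(samples, samples[1:]):
        if current > previous:
            streak += 1
            if streak >= min_increases:
                return True
        else:
            # A flat or falling interval breaks the run.
            streak = 0
    return False
```

For example, `rising_error_trend([0, 0, 1, 2, 4])` returns `True`, while `rising_error_trend([0, 1, 1, 2, 2])` returns `False` because the count never rises three times in a row.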

Evaluating block size alongside routine fsck checks shows where corrective measures are necessary, so that manufacturer standards and operational endurance are maintained.
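Block size matters because drives typically report a failing sector by its logical block address (LBA), counted in sectors from the start of the disk, while file system tools count in file system blocks from the start of the partition. The conversion is plain arithmetic, sketched below. The function name is hypothetical, and the sector size, block size, and partition start are assumptions that would have to be read from the actual partition table and file system.

```python
def lba_to_fs_block(lba, partition_start_lba, sector_size=512, block_size=4096):
    """Convert a disk sector address into a file system block number.

    lba and partition_start_lba are counted in sectors from the start
    of the disk; the result is counted in file system blocks from the
    start of the partition.
    """
    if lba < partition_start_lba:
        raise ValueError("sector lies before the start of the partition")
    byte_offset = (lba - partition_start_lba) * sector_size
    return byte_offset // block_size
```

With a partition starting at sector 2048, 512-byte sectors, and 4096-byte blocks, sector 1050003 falls in block `lba_to_fs_block(1050003, 2048)`, which is 130994. Eight consecutive sectors map to the same block in this configuration, so one bad sector makes a whole 4096-byte block suspect.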

Strategies for Addressing Bad Block Issues

Technical teams use dedicated monitoring software to watch each block for errors, so that incoming data remains reliable in Microsoft Windows environments. Addressing detected faults promptly reduces risk.

Regular diagnostic scans on SATA-connected devices detect anomalies in storage blocks. Accurate tools help maintain operational efficiency and protect against data loss.

Timely intervention with appropriate risk mitigation strategies allows faulty blocks to be remapped or taken out of use before they cause damage. Monitoring tools also simplify troubleshooting on Microsoft Windows platforms and limit downtime.

Preventive maintenance on SATA drives minimizes risk and sustains performance across the network. Proactive use of monitoring software helps keep each block reliable under high-demand conditions.

Long-Term Effects of Bad Blocks on Data Integrity

Over time, bad blocks can diminish data integrity and affect system performance, leading to persistent issues in storage devices.

The impact extends to a wide range of systems, including Linux systems running the Btrfs file system and Windows systems that rely on chkdsk for error verification, all of which may struggle to stay stable over prolonged usage:

- Loss of reliable read and write operations

- Increased physical wear of storage sectors

- Higher error rates during I/O-heavy tasks, such as DVD authoring or graphics workloads on GeForce-equipped machines

Persistent abnormalities in storage can slow down system responsiveness and complicate maintenance tasks for IT teams.

Technical experts find that addressing these long-term effects is crucial for ensuring robust operations and effective risk management across office networks.

Frequently Asked Questions

What defines a bad block in storage devices?

A bad block is a storage sector that no longer reliably stores data due to physical damage or wear. Managed IT services monitor such blocks to avoid data loss in office environments using copiers, printers, and scanners.

How do bad blocks affect data integrity?

Bad blocks may disrupt data integrity by causing misreads or corruption, affecting file retrieval and system reliability in managed IT environments.

What key metrics identify a bad block?

Key metrics include excessive error rates, frequent read failures, prolonged write times, and numerous recovery attempts that indicate data inconsistencies and potential hardware faults.

What issues arise from bad blocks?

Bad blocks on storage media may lead to data corruption, system instability, and increased hardware failures, interrupting routine operations and demanding professional diagnostics and repair to maintain performance and reliability.

How can bad block problems be addressed?

Addressing bad block issues involves running diagnostic programs to isolate problematic sectors and replacing the affected hardware components, ensuring office equipment and managed IT services function without disruption.

Conclusion

Bad blocks pose a significant threat to data reliability, highlighting the necessity for vigilant monitoring and timely maintenance. Precise diagnostic tools enable technical teams to identify error-prone sectors and implement effective corrective measures. Consistent care of storage devices preserves data integrity and minimizes system downtime across various platforms. Recognizing the implications of bad blocks drives proactive strategies that protect business operations and optimize overall performance.